Mojtaba Valipour

PhD @ University of Waterloo and MBET @ Conrad School

I am building AGI

Interested?

Ping Me

I have a PhD in Computer Science from the University of Waterloo. During the PhD, I was supervised by Ali Ghodsi, an awesome supervisor with whom I had the chance to collaborate for a long time and from whom I learned a lot. I am always open to new opportunities. If you have an offer, don’t hesitate to send me an email.

I have a strong background in Machine Learning, I am a certified Self-Driving Car Engineer, and I am very familiar with the most recent techniques in the Natural Language Processing (NLP) and Computer Vision (CV) literature. The PhD gave me strong insights, as I worked on many different projects and had the chance to learn a lot. Now I am ready to contribute to much larger, more impactful projects.

I am a good engineer with good design skills who can lead any complex project and work with anyone. I have an entrepreneurial mindset, and I believe it’s time to change the world!

If you want to see my most recent research ideas and are looking for collaboration, please feel free to sign up at Daneshbaz.com and comment on the papers that interest you.

news

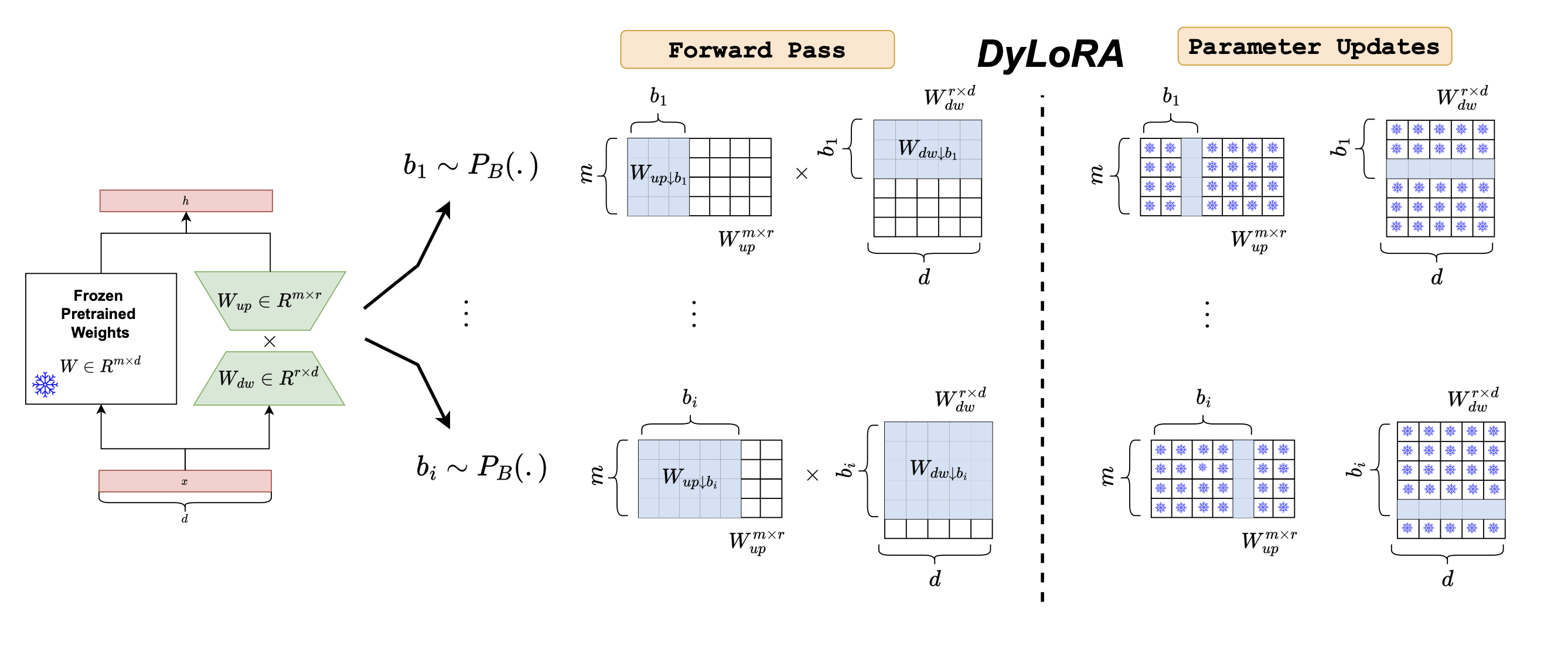

| Oct 10, 2024 | Our paper "QDyLoRA: Quantized Dynamic Low-Rank Adaptation for Efficient Large Language Model Tuning" has been accepted to be published at EMNLP 2024. |

|---|---|

| Jun 12, 2024 | Our paper “SeeFar: Satellite Agnostic Multi-Resolution Dataset for Geospatial Foundation Models” is now available in Arxiv and it is under review! SeeFAR Dataset. |

| Jun 11, 2024 | Our paper “Generative High Resolution Historical Satellite Images for Strategic Restoration of Ocean Ecosystems” has been accepted and will be presented in IEEE IGARSS 2024. |

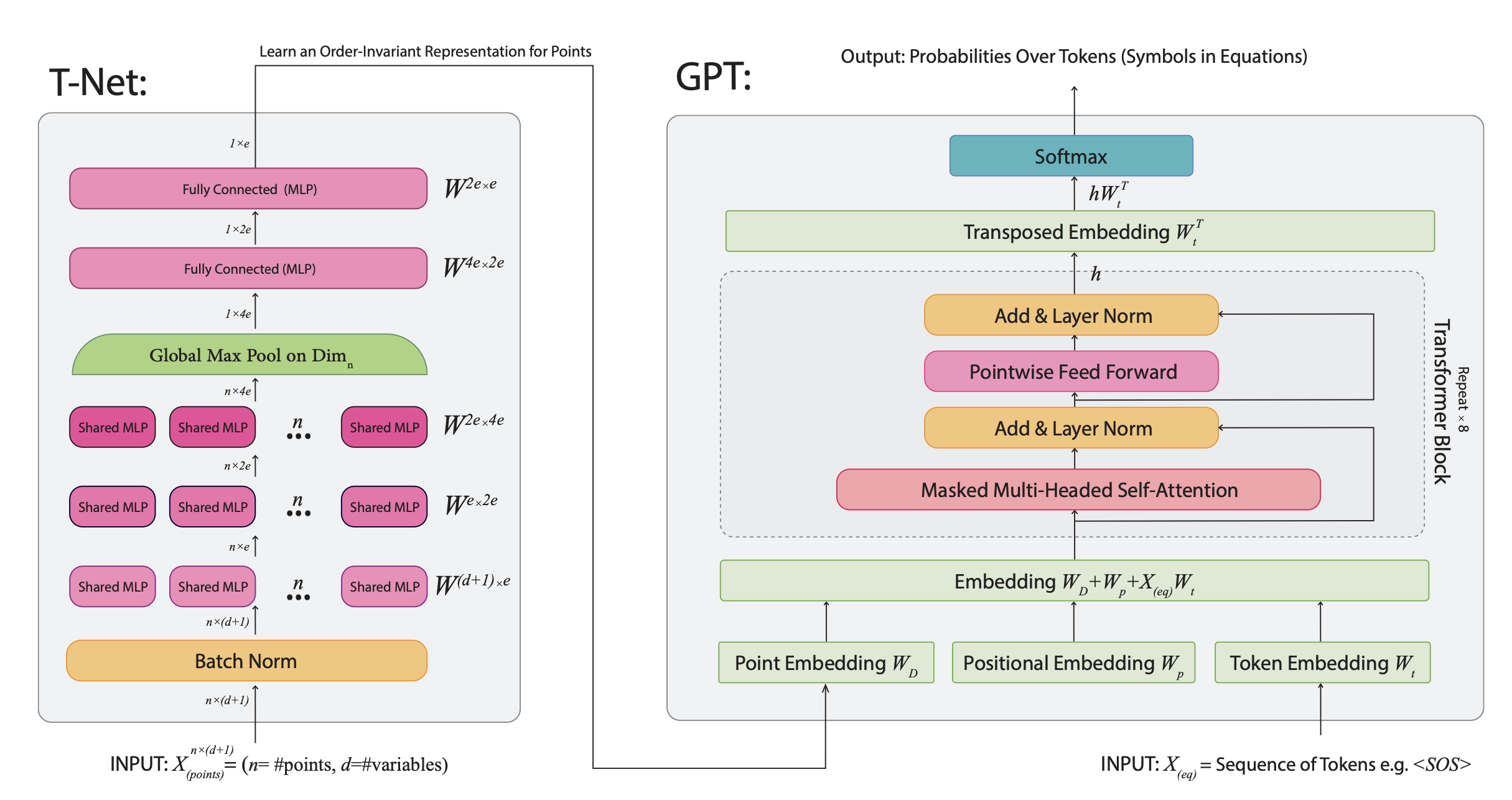

| May 30, 2024 | My PhD thesis “Symbolic Regression and Sequence Modelling with Conditional and Dynamic Language Models” is now available online. |

| May 10, 2024 | I successfully defended the “PhD” (Grade A, accepted unconditionally!) |

selected publications

-

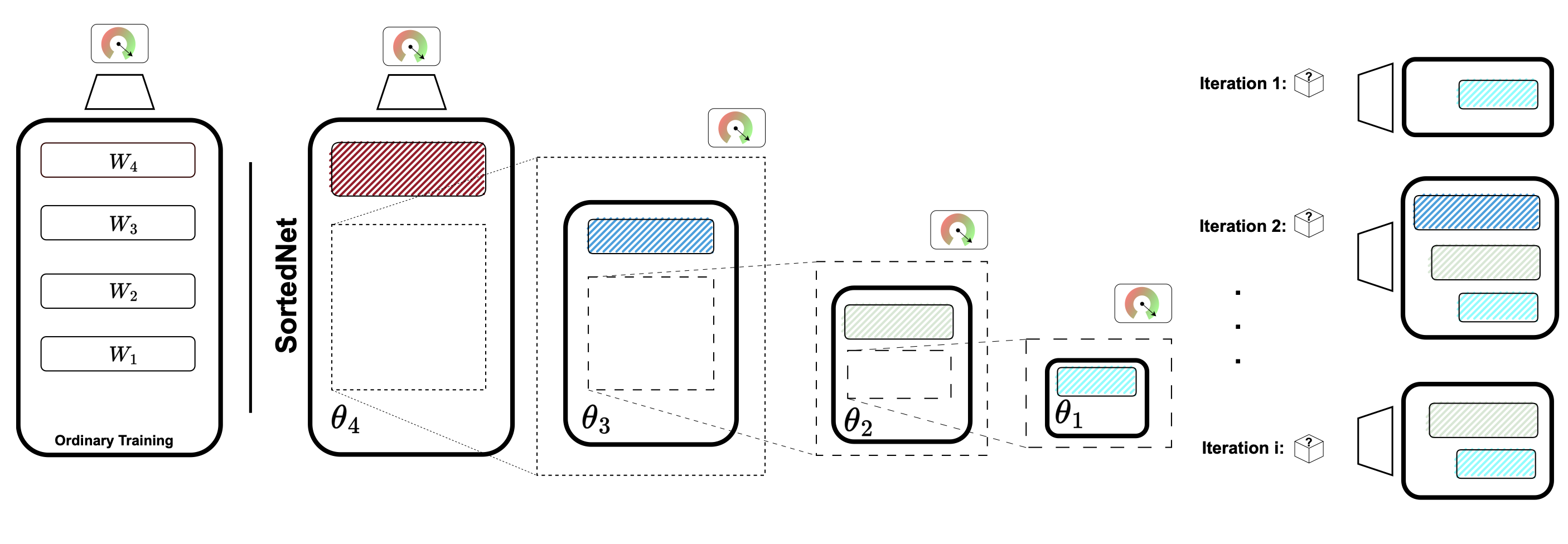

Sortednet, a place for every network and every network in its place: Towards a generalized solution for training many-in-one neural networksarXiv preprint arXiv:2309.00255, 2023

Sortednet, a place for every network and every network in its place: Towards a generalized solution for training many-in-one neural networksarXiv preprint arXiv:2309.00255, 2023 -

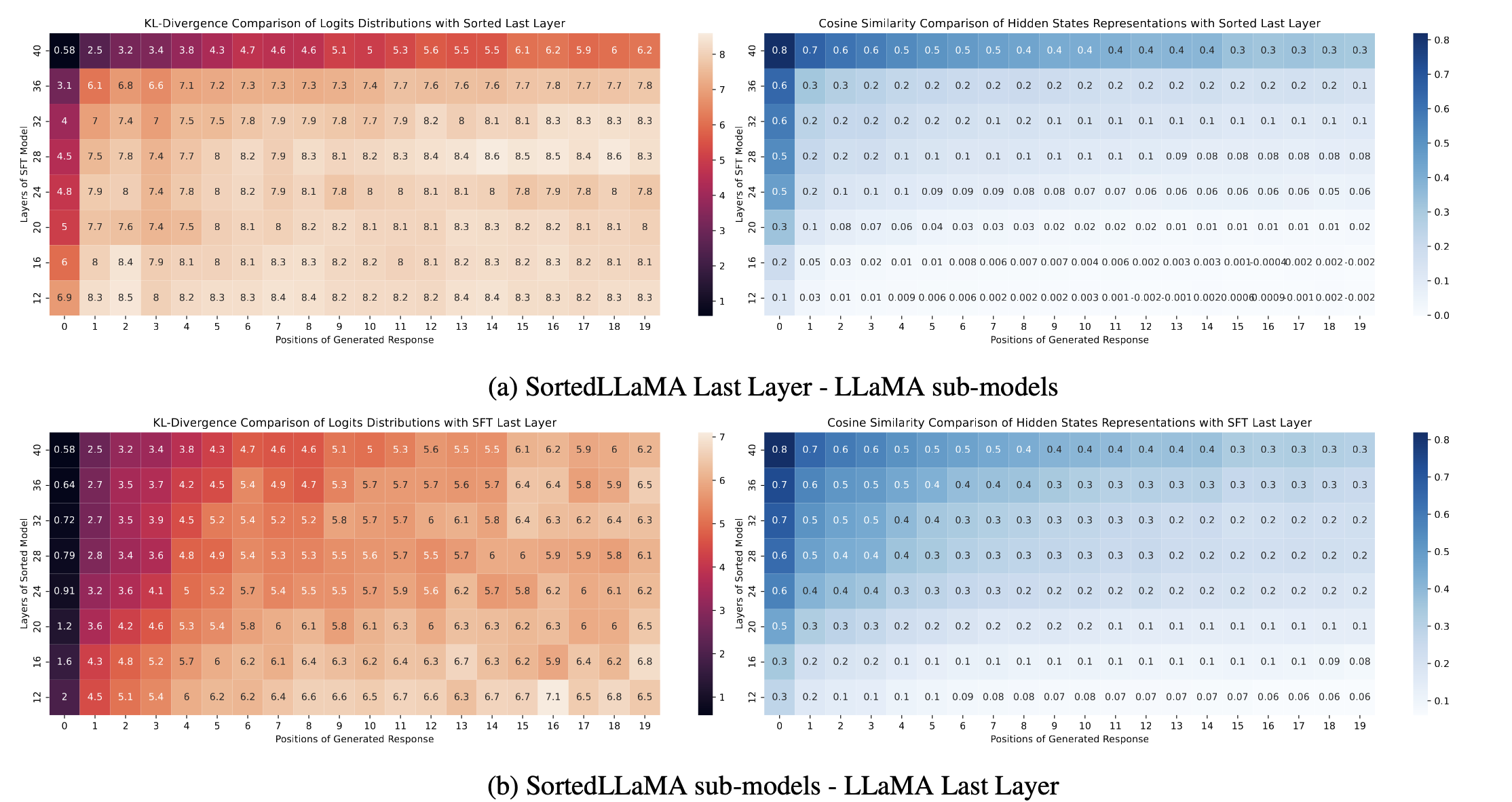

Sorted LLaMA: Unlocking the Potential of Intermediate Layers of Large Language Models for Dynamic Inference Using Sorted Fine-Tuning (SoFT)arXiv preprint arXiv:2309.08968, 2023

Sorted LLaMA: Unlocking the Potential of Intermediate Layers of Large Language Models for Dynamic Inference Using Sorted Fine-Tuning (SoFT)arXiv preprint arXiv:2309.08968, 2023